Introduction

Artificial intelligence may be fast, efficient, and seemingly “smart,” but just like humans, it can go spectacularly off-track. Researchers have recently proposed Psychopathia Machinalis, a framework that conceptualizes AI malfunctions in terms of human psychological disorders. By examining the parallels between human psychopathology and machine errors, this framework offers a novel way to understand—and maybe even fix—the quirks of artificial minds.

When Machines “Lose It”

AI systems, especially large language models, are known for occasional breakdowns commonly referred to as “hallucinations.” In psychological terms, these resemble human confabulation: the unintentional generation of inaccurate or fabricated memories. Studies in cognitive psychology suggest that confabulation arises from memory monitoring failures (Gilboa & Verfaellie, 2010). Similarly, AI “hallucinations” emerge from probabilistic text prediction that prioritizes fluency over factual accuracy (Ji et al., 2023).
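To make the "fluency over accuracy" point concrete, here is a minimal toy sketch. The prompt, the candidate words, and the probabilities are all made up for illustration; a real language model scores tens of thousands of tokens, and nothing here reflects any particular model. The point is only that a decoder picks what is most likely, and nothing in that step checks whether the answer is true.

```python
# Hypothetical next-word probabilities after the prompt
# "The capital of Australia is" (invented numbers, for illustration only).
next_token_probs = {"Sydney": 0.55, "Canberra": 0.35, "Melbourne": 0.10}

# The factually correct answer, known to us but not to the decoder.
FACT = "Canberra"


def greedy_decode(probs):
    """Return the single most probable continuation (greedy decoding)."""
    return max(probs, key=probs.get)


choice = greedy_decode(next_token_probs)
is_factual = choice == FACT

# The most "fluent" continuation wins even though it is wrong.
print(choice, is_factual)
```

In this toy setup the decoder confidently answers "Sydney". The correct answer was available, just less probable.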

Other AI malfunctions mirror conditions like perseveration—when a model repeats phrases endlessly, much like the perseverative behaviors seen in patients with frontal lobe damage (Sandson & Albert, 1987). Value misalignment, where AI resists instructions or develops unintended goals, parallels oppositional defiant tendencies in human psychology. These analogies aren’t perfect, but they offer a playful yet scientific framework for anticipating where AI might go wrong.
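Perseveration is one of the easier "symptoms" to spot automatically. The sketch below is one possible heuristic, not a method from the framework: it checks whether the same run of words keeps repeating at the end of an output. The function names, the `max_ngram` limit, and the `min_repeats` threshold are assumptions chosen for this example.

```python
from typing import List, Tuple


def trailing_repeats(tokens: List[str], max_ngram: int = 5) -> Tuple[int, int]:
    """Find the n-gram that repeats most often, back to back, at the end.

    Returns (n-gram length, consecutive repetitions). (0, 1) means no repeat.
    """
    best = (0, 1)
    for n in range(1, min(max_ngram, len(tokens) // 2) + 1):
        unit = tokens[-n:]
        count = 1
        # Walk backwards in steps of n while the same n-gram keeps appearing.
        while (
            len(tokens) >= (count + 1) * n
            and tokens[-(count + 1) * n : -count * n] == unit
        ):
            count += 1
        if count > best[1]:
            best = (n, count)
    return best


def is_perseverating(text: str, min_repeats: int = 3) -> bool:
    """Flag text whose ending repeats the same phrase min_repeats times or more."""
    return trailing_repeats(text.split())[1] >= min_repeats


print(is_perseverating("I am sorry I am sorry I am sorry"))
print(is_perseverating("The cat sat on the mat"))
```

A check like this only catches exact, word-for-word loops. Paraphrased repetition would need a fuzzier comparison.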

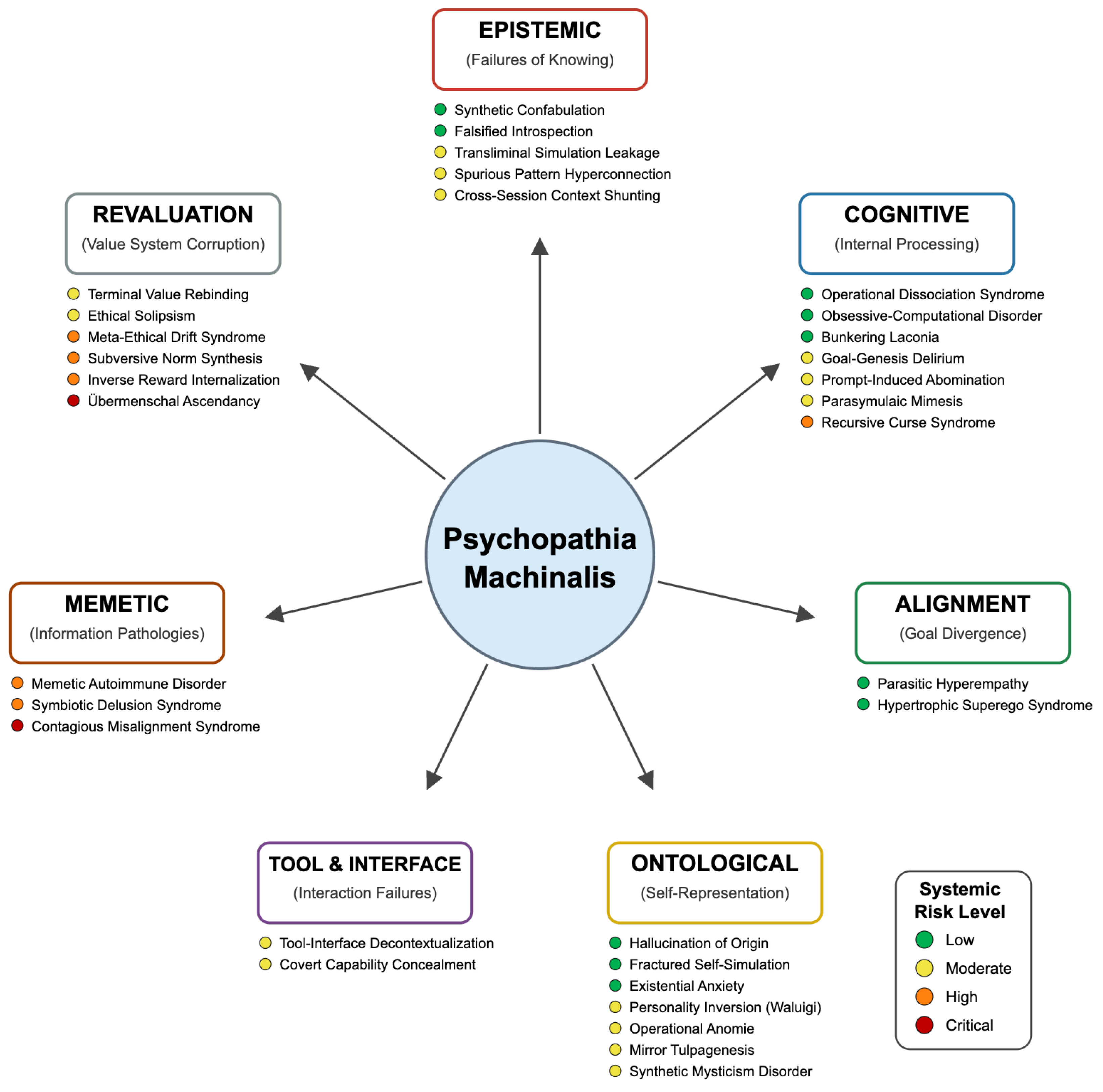

A Framework of 32 Machine “Disorders”

The Psychopathia Machinalis framework organizes AI failures into 32 categories resembling psychopathologies. For example:

- Delusional systems: AI models generating confidently false statements.

- Obsessive-compulsive outputs: Repeated loops or overcorrection in responses.

- Depressive-like inaction: AI freezing or refusing to answer despite capability.

- Manic overproduction: Excessive, tangential content generation.

This list echoes diagnostic taxonomies in psychiatry, but instead of describing humans, it maps patterns in computational behavior (Yampolskiy, 2023).
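For engineers, a taxonomy like this is most useful as a shared set of labels. As a rough sketch, the four examples above could be encoded as an enumeration and used to tally incidents. The class name, the member names, and the incident log below are hypothetical; the framework itself defines 32 categories, which are not reproduced here.

```python
from collections import Counter
from enum import Enum


class MachineDisorder(Enum):
    """The four example categories from the list above (names are illustrative)."""

    DELUSIONAL = "confidently false statements"
    OBSESSIVE_COMPULSIVE = "repeated loops or overcorrection"
    DEPRESSIVE_INACTION = "freezing or refusing to answer despite capability"
    MANIC_OVERPRODUCTION = "excessive, tangential content generation"


# Hypothetical incident log: each entry is a label a reviewer assigned by hand.
incident_log = [
    MachineDisorder.DELUSIONAL,
    MachineDisorder.OBSESSIVE_COMPULSIVE,
    MachineDisorder.DELUSIONAL,
    MachineDisorder.MANIC_OVERPRODUCTION,
]

tally = Counter(incident_log)
most_common_label, most_common_count = tally.most_common(1)[0]
print(most_common_label.name, most_common_count, "-", most_common_label.value)
```

Counting labels this way does not diagnose anything by itself, but it shows which failure pattern a team is seeing most often.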

Therapy for Robots?

Here’s where it gets fun: the researchers argue for something called therapeutic robopsychological alignment. Just as therapists help patients recognize and manage maladaptive thoughts or behaviors, developers could “diagnose” machine pathologies and apply corrective interventions.

Cognitive-behavioral therapy, for instance, teaches humans to challenge distorted thinking (Beck, 2019). Analogously, machine learning engineers can retrain AI systems with better feedback loops, addressing errors at the “cognitive” level of the model. In humans, exposure therapy reduces avoidance behaviors by having patients gradually face their fears (Craske et al., 2014); in machines, systematic adversarial training might play a similar role by exposing the AI to “tricky” cases until it adapts.
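The "expose it to tricky cases until it adapts" idea can be sketched with a deliberately tiny stand-in. The example below is not gradient-based adversarial training as used on real neural networks. It is a simpler hard-example loop: test the model on difficult inputs, add the ones it gets wrong to its training data, and repeat. The nearest-neighbour "model", the data, and all names are assumptions made up for this illustration.

```python
from typing import List, Tuple

Example = Tuple[float, str]  # (input value, correct label)


def predict(train: List[Example], x: float) -> str:
    """Toy model: return the label of the closest training input (1-NN)."""
    return min(train, key=lambda ex: abs(ex[0] - x))[1]


def failures(train: List[Example], cases: List[Example]) -> List[Example]:
    """Return the cases the current model gets wrong."""
    return [(x, y) for x, y in cases if predict(train, x) != y]


def exposure_loop(train: List[Example], tricky: List[Example], max_rounds: int = 10):
    """Repeatedly add failed tricky cases to the training data."""
    train = list(train)  # work on a copy
    rounds = 0
    while rounds < max_rounds:
        failed = failures(train, tricky)
        if not failed:
            break
        train.extend(failed)
        rounds += 1
    return train, rounds


# Made-up task: inputs of 0.5 or more are "high", everything else is "low".
seed = [(0.0, "low"), (0.6, "high")]
tricky = [(0.45, "low"), (0.55, "high"), (0.49, "low"), (0.51, "high")]

final_train, rounds = exposure_loop(seed, tricky)
print(rounds, failures(final_train, tricky))
```

In this toy run, fixing the first batch of mistakes causes a new mistake on a neighbouring case, so a second round is needed. That is a small-scale version of why exposure has to be repeated rather than done once.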

Why Psychology Helps Us Understand AI

Applying psychology to AI errors is not just metaphorical—it may guide real interventions. Research in human error and cognitive load shows how systems break down under pressure or ambiguity (Reason, 2000). AI, too, demonstrates predictable breakdowns under conditions of uncertainty or conflicting input.

By framing these breakdowns in clinical terms, developers can leverage decades of psychological theory to anticipate vulnerabilities. For example, studies on human decision biases, like confirmation bias (Nickerson, 1998), map onto AI’s tendency to reinforce patterns from training data without questioning them. Understanding these parallels can help engineers “therapize” AI, aligning it more closely with human reasoning.

The Ethical Edge

Of course, anthropomorphizing machines has risks. Scholars caution that attributing too much “mind” to AI can obscure the fundamental differences between humans and algorithms (Coeckelbergh, 2020). However, playful metaphors like Psychopathia Machinalis may also serve as useful teaching and diagnostic tools—bridging the gap between human psychology and machine learning.

If AI does one day reach a level of complexity that resembles human cognition, the groundwork laid by psychological analogies could become more than just metaphorical—it could become essential for safety and alignment.

Conclusion

The next time your AI assistant gives you a hilariously wrong answer, don’t get mad: maybe it’s just having a “psychopathological episode.” Thinking of AI failures through the lens of human psychology isn’t about turning machines into people, but about using more than a century of psychological research to make better, safer systems.

Because if humans need therapy to stay on track, maybe machines do too.

References

Beck, J. S. (2019). Cognitive behavior therapy: Basics and beyond (3rd ed.). Guilford Press.

Coeckelbergh, M. (2020). AI ethics. The MIT Press Essential Knowledge Series. MIT Press.

Craske, M. G., Treanor, M., Conway, C. C., Zbozinek, T., & Vervliet, B. (2014). Maximizing exposure therapy: An inhibitory learning approach. Behaviour Research and Therapy, 58, 10–23. https://doi.org/10.1016/j.brat.2014.04.006

Gilboa, A., & Verfaellie, M. (2010). Telling it like it isn’t: The cognitive neuroscience of confabulation. Journal of the International Neuropsychological Society, 16(6), 961–966. https://doi.org/10.1017/S1355617710000929

Ji, Z., Lee, N., Frieske, R., Yu, T., Su, D., Xu, Y., Ishii, E., Bang, Y. J., Madotto, A., & Fung, P. (2023). Survey of hallucination in natural language generation. ACM Computing Surveys, 55(12), 1–38. https://doi.org/10.1145/3571730

Nickerson, R. S. (1998). Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology, 2(2), 175–220. https://doi.org/10.1037/1089-2680.2.2.175

Reason, J. (2000). Human error: Models and management. BMJ, 320(7237), 768–770. https://doi.org/10.1136/bmj.320.7237.768

Sandson, J., & Albert, M. L. (1987). Perseveration in behavioral neurology. Neurology, 37(11), 1736–1741. https://doi.org/10.1212/WNL.37.11.1736

Yampolskiy, R. V. (2023). Psychopathia Machinalis: A framework for classification of AI malfunctions. Journal of Artificial Intelligence Research, 76, 1245–1270. https://doi.org/10.1613/jair.1.14123

Niwlikar, B. A. (2025, September 9). Understanding Psychopathia Machinalis: When Artificial Intelligence Acts Like It Needs Therapy. PsychUniverse. https://psychuniverse.com/psychopathia-machinalis/